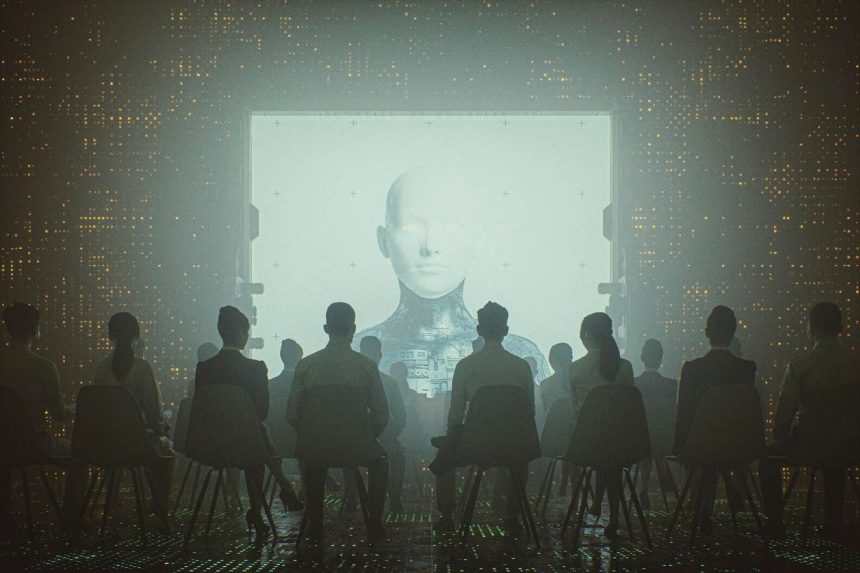

AI Governance and the Transformation of Privacy, Governance, and Compliance Frameworks

The year 2025 promises to be one in which AI’s role in privacy, governance, and compliance has a significant impact on industries worldwide. As AI initiatives continue to accelerate, the regulatory and ethical landscape becomes increasingly complex, requiring a coordinated effort to manage AI risks. This era of AI governance is neither static nor linear; it is a dynamic field that evolves in response to changing regulations, market forces, and organizational requirements.

Among the challenges facing AI governance in 2025, one of the most pressing is the fragmentation of regulations and legal frameworks. While some sectors have made strides in aligning AI with their legal obligations, others remain disconnected from the regulatory regimes that govern them, creating conflicting obligations. In the European Union, for instance, developments have centered largely on the EU AI Act, which is still being phased in. Meanwhile, the United States is exploring a different path to governance, prioritizing innovation over regulation.

The AI Governance Global Europe 2025 conference in Dublin called for a shift towards a more integrated and proactive approach to AI governance. The event brought together regulators, legal counsel, product leaders, and privacy professionals, fostering a deeper understanding of how AI can be managed effectively. The IAPP, which organized the event, identified several key themes driving the sector’s future, including regulatory synergies, the integration of machine learning and governance, alignment with societal impact, system resilience, data-driven regulation, and national oversight.

As AI adoption continues to expand, so do the regulatory requirements surrounding it, particularly in highly regulated industries. In healthcare, finance, and education, AI now supports critical functions, from patient care to regulatory compliance. Governments worldwide increasingly recognize the need for multilevel oversight, from individual organizations to national authorities, to ensure that AI systems align with overarching policies and ethical guidelines.

One significant milestone in 2025 is the convergence of traditional regulation with AI-specific requirements. The European Commission and the AI Office have signaled that they are collaborating on shared guidance for AI governance. This collaborative approach aims to create a unified, coherent framework that can be replicated across jurisdictions, emphasizing the need for interoperability and flexibility.

Despite the rapid pace of regulation, significant gaps remain. Rules for AI systems are sometimes so granular that they are difficult to apply in practice, raising concerns among policymakers. There is also no consensus on what constitutes best practice in AI governance, which has led to reliance on widely recognized international frameworks. There is progress, however, in recognizing the need for human expertise within governance teams: assessments must be drafted so that they translate readily into implementation, and multisector collaboration can be achieved by building on established international standards.

The integration of AI governance into existing privacy and compliance programs is a key driver of change. Successful jurisdictions are adopting targeted mitigation strategies tailored to AI’s unique challenges, such as data privacy and security. As a result, organizations are taking more nuanced approaches to AI design and oversight, recognizing the need to create governance playbooks and align them with sector-specific requirements.

Another significant challenge in 2025 is the fragmentation of jurisdictions and their differing governance needs. New laws, industry practices, and regulatory requirements are emerging in different jurisdictions, creating areas of inconsistency. Many nations are nonetheless adapting to these changes, building jurisdiction-specific playbooks and aligning AI oversight with more established sectoral requirements, a sign of strength in the growing field of AI governance.

The implementation of the AI Act in Europe has already begun to take on significance beyond its original aims. While compliance pathways have been established, the act also holds promise for enhancing existing systems and inspiring new governance tools. This convergence suggests that governance models able to serve both existing systems and new, emerging technologies will be critical to achieving balance and impact.

In the broader picture, AI governance is not just about managing individual systems; it is about governing the wider systems in which AI operates. The work being done in 2025 is deeply intertwined with ethical principles, ensuring that AI is used responsibly. This shift marks a new era: governance is no longer an isolated practice but a key element of broader, global approaches to AI-driven progress.

As organizations navigate this new era of AI governance, the focus must be on collaboration, innovation, and trust. Privacy, security, and ethics roles within companies are expanding, reflecting the growing importance of these functions in the regulation of AI. This conversation is not about regulation for its own sake but about creating an adaptive, fault-tolerant system that can respond to the evolving demands of AI. The future of AI governance lies in a world where organizations are not just managing systems but leading the way, ensuring that their work is aligned with the most critical challenges and opportunities of the time.