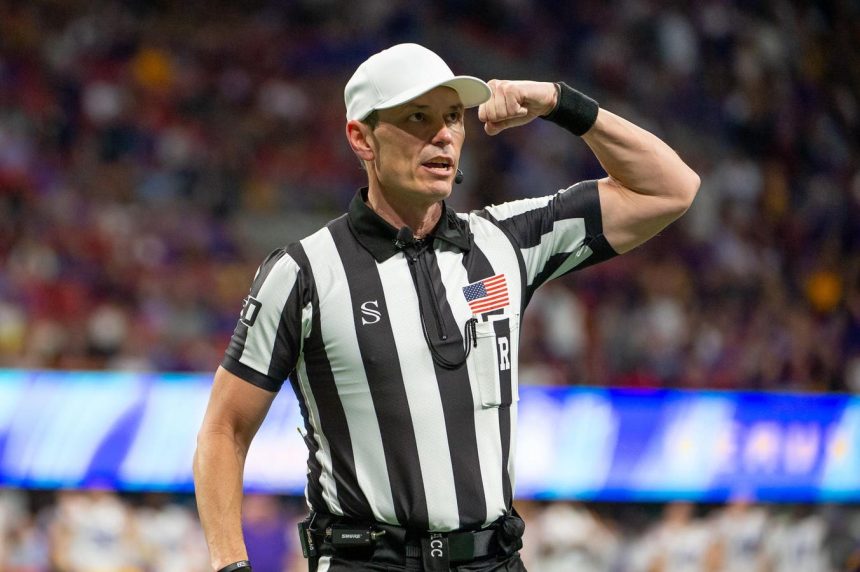

The 2024-25 College Football Playoff quarterfinals reignited debate about the controversial targeting rule, particularly after a crucial no-call in the double-overtime Peach Bowl between Texas and Arizona State. A hard hit on an Arizona State receiver went unpenalized despite appearing to meet the criteria for targeting, and the moment swung the momentum of the game and ultimately contributed to Texas’ victory. The incident highlighted the subjectivity involved in applying the targeting rule and fueled discussion about whether artificial intelligence (AI) could bring greater consistency and objectivity to officiating. The outcry, notably from Big 12 Commissioner Brett Yormark, echoed a growing sentiment within the sport for a more standardized approach to this complex and game-altering penalty.

The NCAA defines targeting (Rule 9-1-4) as taking aim at a defenseless opponent and making forcible contact to the head or neck area in a way that goes beyond a legal tackle or block. Introduced in 2008 as a 15-yard penalty, the rule was significantly strengthened in 2013 with the addition of mandatory ejection. The ejection covers the remainder of the current game and, when the foul occurs in the second half, carries over into the first half of the team's next game, which underscores the severity of the infraction and its purpose of enhancing player safety. The rule aims to deter dangerous plays that increase the risk of concussions and other serious head and neck injuries. Despite that clear intent, the application of the rule remains a source of ongoing contention.

The controversy surrounding targeting stems from the significant consequences of a call, combined with the subjective interpretation required by officials. While the rulebook outlines specific indicators of targeting, such as launching, leading with the helmet, or lowering the head before impact, the practical application often involves nuanced judgments about intent and the force of contact. This inherent subjectivity opens the door to inconsistencies, with different officiating crews potentially reaching different conclusions on similar plays. This variability raises concerns about fairness and the potential for game-changing calls to be influenced by human error or biased perspectives. The Peach Bowl incident exemplifies this perfectly, showcasing how a crucial no-call can shift the trajectory of a game and spark widespread debate about the consistency and fairness of officiating.

Other sports, recognizing the challenges of human subjectivity in critical calls, have begun exploring technology-assisted officiating. FIFA’s introduction of the Video Assistant Referee (VAR) in 2018, followed by its adoption in the English Premier League, aimed to give referees additional video evidence for decisions on key moments such as offside, handball, and fouls. While intended to reduce incorrect calls, VAR has generated its own share of controversies and has been criticized for disrupting game flow and occasionally altering outcomes in contentious ways. Major League Baseball (MLB) has experimented with an automated ball-strike system (ABS) at the Triple-A level, testing both fully automated and challenge-based approaches. Both approaches offer a path toward greater accuracy and consistency, but they also raise challenges related to player-specific strike zones and the acceptance of automated judgments in a traditionally human-driven sport.

The potential benefits of AI in officiating are clear. Advanced algorithms, trained on vast datasets of game footage, could identify targeting infractions with greater speed and consistency than human officials. Computer vision technology can analyze individual frames of video, detecting subtle cues in player movement, head positioning, and the apparent force of impact that might be missed by the human eye. This could eliminate some of the inconsistency and human error that currently plague targeting calls. However, significant challenges remain before AI can realistically be deployed in high-stakes college football games.

The development of effective AI officiating systems requires massive amounts of labeled data: every play in the training dataset must be meticulously tagged by humans to identify instances of targeting. This process is time-consuming and resource-intensive. Furthermore, AI algorithms must be capable of real-time processing to keep pace with live game action, which demands substantial computing power and low-latency video feeds. Beyond the technical hurdles, there are also cultural barriers to overcome. Fans, players, and administrators may resist ceding control over crucial game decisions to machines, especially in a sport where human judgment and interpretation have always played a central role. The historical shift from computer rankings in the BCS era to the human selection committee of the College Football Playoff highlights this preference for human oversight.
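To make the real-time requirement concrete, here is a rough back-of-the-envelope sketch in Python. It is illustrative only: the function names are hypothetical, and the 60 frames-per-second feed and 10 ms per-frame analysis time are assumptions chosen for the arithmetic, not figures from any actual officiating system.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to analyze one frame without falling behind, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def keeps_up(fps: float, inference_ms: float, cameras: int = 1) -> bool:
    """True if a single worker analyzing `cameras` feeds one after another
    finishes every frame before the next one arrives."""
    return inference_ms * cameras <= frame_budget_ms(fps)


if __name__ == "__main__":
    # Assumed values for illustration only.
    fps = 60.0           # hypothetical broadcast frame rate
    inference_ms = 10.0  # hypothetical per-frame analysis time

    print(f"Budget per frame at {fps:.0f} fps: {frame_budget_ms(fps):.1f} ms")
    print("One camera keeps up:", keeps_up(fps, inference_ms, cameras=1))
    print("Two cameras keep up:", keeps_up(fps, inference_ms, cameras=2))
```

Under these assumptions, a single feed fits within the roughly 16.7 ms available per frame, but adding a second camera angle on the same hardware already exceeds it. That is one way to see why multi-angle, live analysis demands substantial computing power.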

While AI offers a tantalizing vision of a future where targeting calls are standardized and consistent, implementation remains a distant prospect. Significant technical advancements, substantial financial investment, and a shift in cultural acceptance are all prerequisites. Should the college football community choose to embrace AI in officiating, it will require a collaborative effort involving universities, conferences, technology providers, and broadcasters. That investment, however, could ultimately lead to a fairer and less controversial game, minimizing the impact of subjective officiating on outcomes and shifting the focus back to the athleticism and strategy on the field.